We have always been looking for the best ways to architect our applications and platforms so that they are maintainable, extensible, observable, adaptable and easy to evolve (this is by no means a complete list of the properties we would like to have, just the foundational ones). Layered architecture, component-based architecture, hexagonal architecture, microservice architecture (and many others) advocate design principles and guidelines meant to achieve these goals.

For example, layered architecture suggests a clear separation between different layers (persistence, services, caching, presentation, ...) with strict rules imposed on how layers may talk to each other. Component-based architecture, quite widespread in the world of UI development but not limited to it, introduces components (which internally may be built on top of a layered architecture or another architectural style) as the key building blocks of the application or system. In turn, hexagonal architecture goes even further by introducing the concepts of ports and adapters around the application core. Last but not least, microservice architecture, the hottest architectural style these days, advocates structuring the application or system as a set of loosely coupled, ideally small, self-sufficient services which collaborate with each other.
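To make the ports and adapters idea a bit more tangible, here is a minimal sketch in plain Java. All the names in it (`OrderRepository`, `OrderService`, `InMemoryOrderRepository`) are hypothetical and chosen only for illustration; a real application would likely have richer domain types and a database-backed adapter.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.Optional;

public class HexagonalSketch {
    // Port: the application core declares what it needs, not how it is provided.
    interface OrderRepository {
        void save(String orderId, double total);
        Optional<Double> findTotal(String orderId);
    }

    // Application core: depends only on the port, never on a concrete adapter.
    static final class OrderService {
        private final OrderRepository repository;

        OrderService(OrderRepository repository) {
            this.repository = repository;
        }

        void placeOrder(String orderId, double total) {
            if (total <= 0) {
                throw new IllegalArgumentException("total must be positive");
            }
            repository.save(orderId, total);
        }

        Optional<Double> totalOf(String orderId) {
            return repository.findTotal(orderId);
        }
    }

    // Adapter: one possible implementation of the port (in-memory, for illustration).
    static final class InMemoryOrderRepository implements OrderRepository {
        private final Map<String, Double> totals = new HashMap<>();

        @Override
        public void save(String orderId, double total) {
            totals.put(orderId, total);
        }

        @Override
        public Optional<Double> findTotal(String orderId) {
            return Optional.ofNullable(totals.get(orderId));
        }
    }

    public static void main(String[] args) {
        // The adapter is chosen at the edge of the application and handed to the core.
        OrderService service = new OrderService(new InMemoryOrderRepository());
        service.placeOrder("order-1", 42.0);
        System.out.println(service.totalOf("order-1").orElse(0.0));
    }
}
```

The point of the sketch is the direction of the dependency: the core knows the port only, so the in-memory adapter could be swapped for a different one without touching `OrderService`.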

Each of the aforementioned architectural styles (as well as others we haven't mentioned) has its own strengths and weaknesses and a context where it shines the most. But there are two kinds of architecture which beat them all, and those are the ones we are going to discuss right away.

Proximity Architecture

There is only one principle this architectural style offers: use anything you want anywhere you need it. Do you need access to a service from a data transfer object? No problem, just do it! Maybe your RESTful resource needs access to persistence services? Not an issue, go for it, because by and large, why bother? It is just a pile of classes (usually within the same application), so use static fields or methods, dependency injection or any other technique your language / frameworks provide. In the end, if the compiler is happy, what is the problem? Right?

No, it is not right ... For sure, you have heard funny terms such as "big ball of mud" or "spaghetti code". Those are the typical outcomes when proximity architecture drives the show.
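Here is a small sketch of what the "service from a data transfer object" shortcut might look like, next to a more disciplined alternative. The classes (`PriceService`, `ProximityItemDto`, `ItemDto`) and the sample data are made up for this illustration and do not come from any particular codebase.

```java
import java.util.Map;

public class ProximitySketch {
    // Hypothetical service holding some pricing data.
    static final class PriceService {
        // Global instance: convenient to reach from anywhere, which is exactly the problem.
        static final PriceService INSTANCE = new PriceService(Map.of("book", 12.5));

        private final Map<String, Double> prices;

        PriceService(Map<String, Double> prices) {
            this.prices = prices;
        }

        double priceOf(String sku) {
            return prices.getOrDefault(sku, 0.0);
        }
    }

    // Proximity style: the DTO reaches out to a service through a static field.
    static final class ProximityItemDto {
        final String sku;

        ProximityItemDto(String sku) {
            this.sku = sku;
        }

        double getPrice() {
            // Hidden dependency: nothing in the DTO's signature reveals this call.
            return PriceService.INSTANCE.priceOf(sku);
        }
    }

    // Alternative: the DTO is plain data and knows nothing about services.
    static final class ItemDto {
        final String sku;
        final double price;

        ItemDto(String sku, double price) {
            this.sku = sku;
            this.price = price;
        }
    }

    // The mapping lives in one place and receives the service explicitly.
    static ItemDto toDto(String sku, PriceService prices) {
        return new ItemDto(sku, prices.priceOf(sku));
    }

    public static void main(String[] args) {
        System.out.println(new ProximityItemDto("book").getPrice());
        // With an explicit dependency, a different price source is easy to pass in.
        PriceService discounted = new PriceService(Map.of("book", 10.0));
        System.out.println(toDto("book", discounted).price);
    }
}
```

Both variants compile and both return a price. The difference only shows up later, when someone tries to test, move or replace `PriceService` and discovers how many unrelated classes quietly depend on it.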

There are many reasons why proximity architecture emerges. Those reasons range from the constant urge to push features out, to a lack of knowledge and/or experience in software craftsmanship, to, in the worst case, an unethical and careless approach to the software engineering discipline. If you happen to have startup experience, or have worked for one of those large companies that still claim to be a startup to justify their chaos, I'm sure you have seen this form of architecture or one of its variations. Are they all doomed?

Well, I would argue, no, not really, but fleeing the claws of proximity architecture depends solely on the trade-offs you are going to make. For developers, it is hard or even impossible to push back on the timelines set for delivering new features (unless you are exceptionally lucky and your manager understands what technical debt is and how to keep it under control). You have to, and you will, take shortcuts. However, it is enormously important to strive for the right balance between pouring in hacky code (on top of a bunch of other hacks) and going with a quick, not-the-best option while keeping in mind how much harm it could do to the overall application architecture (and how hard it would be to refactor / improve / reverse that later on). Undoubtedly, seasoned software developers are able to cope with that.

I know, it sounds too vague to be actionable advice, but every company is unique and there is no magic universal rule or recipe to stop proximity architecture from poisoning your applications. One thing is clear though: if you let it in and do nothing, you are going to end up with an unmanageable codebase, and the only route left would be to rewrite everything from scratch.

Micromonolith Architecture

Microservices, microservices, microservices ... everyone talks about them ... You hear from left and right that if you don't do microservices, then you are stuck in the Stone Age. And many truly believe that all their problems and issues could be solved with microservices.

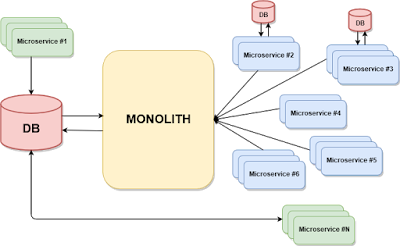

In many regards, microservices architecture could put life into stagnating projects, assuming you are ready to embrace it across the whole organization, culturally and technically. This is a serious long-term commitment, as such changes won't happen overnight. But so many organizations fail to see the new beast rising here: micromonolith architecture in microservice clothing.

Here is what you could be sold as microservices architecture. Many organizations have ended up with a monolith and have no choice but to deal with it. Quality is terrible, productivity is going down, releasing new features takes months (or even years) ... and throwing more people at it does not help at all. So everyone tries to escape the monolith, whatever it takes, by building standalone applications or services on the side. Luckily, the terrific Spring Boot makes that as easy as falling off a log.

At first glance, it doesn't look bad: after all, they are calling it a microservice, and a bunch of things are calling each other, right? Except that this is not right at all. Applications and services that thrive in this kind of unhealthy ecosystem often do not own any data. They do not have sufficient knowledge to function independently and they do not belong to a concrete domain. These kinds of services need to talk to the monolith to perform any useful function and, in the worst-case scenario, they share a database with the monolith and become mere chatty proxies.
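The difference between a chatty proxy and a service that owns its data can be sketched in a few lines of plain Java. Everything here is hypothetical: `MonolithClient` stands in for whatever remote call the satellite service would make to the monolith, and the customer data is invented for the example.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.Optional;

public class MicromonolithSketch {
    // Hypothetical stand-in for a remote call into the monolith.
    interface MonolithClient {
        Optional<String> fetchCustomerName(String customerId);
    }

    // "Micromonolith" style: owns no data and forwards every request to the monolith.
    static final class ProxyCustomerService {
        private final MonolithClient monolith;

        ProxyCustomerService(MonolithClient monolith) {
            this.monolith = monolith;
        }

        Optional<String> nameOf(String customerId) {
            return monolith.fetchCustomerName(customerId);
        }
    }

    // A service that owns its data and can answer on its own.
    static final class OwningCustomerService {
        private final Map<String, String> customers = new HashMap<>();

        void register(String customerId, String name) {
            customers.put(customerId, name);
        }

        Optional<String> nameOf(String customerId) {
            return Optional.ofNullable(customers.get(customerId));
        }
    }

    public static void main(String[] args) {
        // Simulate the monolith being unavailable.
        MonolithClient unavailable = id -> {
            throw new IllegalStateException("monolith unavailable");
        };

        ProxyCustomerService proxy = new ProxyCustomerService(unavailable);
        try {
            proxy.nameOf("c-1");
        } catch (IllegalStateException e) {
            System.out.println("proxy failed: " + e.getMessage());
        }

        OwningCustomerService owning = new OwningCustomerService();
        owning.register("c-1", "Alice");
        System.out.println("owning service answered: " + owning.nameOf("c-1").orElse("unknown"));
    }
}
```

In this sketch the proxy is only as available as the monolith behind it, while the owning service keeps working regardless. Real systems are of course messier, but the dependency shape is the thing to look for.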

Why is that? Well, because in most cases it is very hard to migrate a large monolithic application to microservices architecture (the true one). And along with the migration, the organization should transform itself to become fertile ground for cultivating microservices. Not everyone is brave enough to pull it off, but the good news is that it is feasible. And here is why.

While building the monolith, the organization (hopefully) becomes an expert in its domain. There is a great deal of knowledge developed around the product, and there are well-defined, well-understood use cases and flows; domain-driven design might be of great help to formalize and capture all that. Once there is a solid understanding of what should be built, it is time to start cutting pieces off the monolith. It is going to be a bumpy ride for sure, and you probably won't be able to get from point A to point B directly (refactorings? splitting the monolith into modules?), not to mention evolving your testing practices (consumer contract tests? component tests? e2e tests?) along the way. But the rewards are worth the effort.

Microservice architecture is a great one. It opens up an unlimited number of opportunities and, when done right, could bring back the joy of development and serve as a solid foundation for the organization's software stacks, current and future ones. But neglecting its core principles would pave the road to frustration and chaos.
